How TikTok’s New ‘AI Alive’ Tool is Revolutionizing Photo Transformation

Imagine snapping a photo and, with a single sentence, turning that static image into a fully animated video. No video-editing skills required, no need to fumble through timelines or transitions. That’s the promise of TikTok’s new generative AI feature, ‘AI Alive’—a tool that isn’t just impressive; it’s a potential game-changer for digital storytelling. While we’ve seen AI tools generate text and even images from prompts, TikTok is taking it a step further: breathing motion into still frames.

As someone who’s chronicled shifts in tech culture for over a decade, I’ll admit: seldom does a tool make me do a double take. But AI Alive? This one sparked more than just curiosity—it triggered a spirited debate in my head about creativity, ethics, and the ever-blurring line between real and generated content.

What is ‘AI Alive’ and How Does It Work?

What sets AI Alive apart is that it’s not just a video-editing app or a simple filter. Instead, it harnesses generative AI to infer motion, mood, and behavior from a single frame. Reportedly powered by the GPT-Image-1 model, AI Alive lets users upload ordinary photos and type a one-sentence prompt that brings the photo to life as an animated video.

The Technology Behind AI Alive

At its core, the GPT-Image-1 model reportedly uses a transformer-based architecture similar to OpenAI’s GPT-4, but trained on both static images and moving footage. This allows the model to understand how subjects should behave in context. For example, give it a photo of a person sitting at a piano with the prompt “play a soft jazz melody,” and the software animates hands playing the keys, adding ambient lighting and subtle expressions on the person’s face. It’s not just moving pixels; it’s working from concepts.

- Natural motion prediction: Characters blink, breathe, and move in a way that feels organic, not robotic.

- Contextual generation: AI Alive considers the relationships between objects, so they interact plausibly in the generated video.

- Audio support (still in beta): TikTok has hinted at upcoming features that add a generated voice or background sound to your video.

This is next-level animation—so intuitive it feels like magic. But does magic come with a price?
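Behind the magic sits a concrete workload. Assuming the uploaded photo serves as the clip’s opening frame, every other frame has to be synthesized by the model. The quick sketch below counts them; the 5-second length and 24 fps frame rate are illustrative assumptions, not published AI Alive specifications.

```python
def frames_to_generate(duration_s: float, fps: int) -> int:
    """Count the frames a model must synthesize to animate one still photo.

    Assumes the photo is used as the clip's first frame, so every
    remaining frame has to be inferred by the model.
    """
    if duration_s <= 0 or fps <= 0:
        raise ValueError("duration and fps must be positive")
    total_frames = round(duration_s * fps)
    return max(total_frames - 1, 0)


# Illustrative assumption only: a 5-second clip at 24 frames per second.
print(frames_to_generate(5, 24))  # 119
```

Each of those frames has to stay consistent with the photo and with its neighbors, which is what the natural-motion and contextual-generation claims are really about.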

Use Cases: More Than Just Social Media Gimmicks

Although TikTok is known as a social media playground for Gen Z dances and trending memes, AI Alive opens the door to a spectrum of serious (and playful) uses. Here’s where it’s already making waves:

1. Storytelling and Micro-Filmmaking

Creators are already spinning entire narratives from old family portraits, transforming archive photos into beautifully animated diary entries. Think of people reanimating their grandparents in vintage photos to “tell” their stories through generated motion and subtle voice overlays.

2. Marketing and Branding

Brands are eyeing AI Alive for rapid prototyping of ad visuals. A static product image can be converted into a dynamic showcase in seconds. For startups without big videography budgets, this is a dream toolkit.

3. Education and Historical Reconstructions

Some educators are experimenting with animating significant historical images, such as making Abraham Lincoln appear to read his Gettysburg Address or showing prehistoric creatures moving naturally based on fossil data. Authenticity is still debated, but there’s no denying how engaging these videos are.

Ethics vs. Excitement: Are We Ready for AI Video Generation?

Here comes the uncomfortable part: just because we can do something with AI, does it mean we should? TikTok may be democratizing content creation, but the tool also teeters on an ethical edge.

Concerns Over Deepfakes

A tool that lets anyone animate another person from a photo invites abuse, even if most people use it with good intentions. Advocacy groups are already pressing TikTok for stricter controls, including:

- Watermarking AI-generated content to distinguish real from fictional visuals.

- Consent-based generation: clear rules on whether people may reanimate images of subjects who haven’t consented.

- Regulation and platform monitoring to prevent political misuse and the spread of misinformation.
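What might the first of those controls look like? Here is a minimal sketch of the simplest variant: a metadata label that declares a clip AI-generated and ties itself to the exact video bytes with a SHA-256 hash. The JSON fields, the `example-model` generator name, and both helper functions are hypothetical; real provenance schemes such as C2PA Content Credentials add cryptographic signatures on top of this basic idea.

```python
import hashlib
import json


def make_provenance_label(video_bytes: bytes, generator: str, prompt: str) -> str:
    """Build a JSON label declaring a clip as AI-generated.

    Hypothetical format for illustration only; it is not signed, so it
    proves nothing about who created it.
    """
    label = {
        "ai_generated": True,
        "generator": generator,
        "prompt": prompt,
        # The hash ties the label to these exact bytes; any edit breaks the match.
        "sha256": hashlib.sha256(video_bytes).hexdigest(),
    }
    return json.dumps(label, sort_keys=True)


def label_matches(video_bytes: bytes, label_json: str) -> bool:
    """Check that a label belongs to this clip and marks it as AI-generated."""
    label = json.loads(label_json)
    expected = hashlib.sha256(video_bytes).hexdigest()
    return label.get("ai_generated") is True and label.get("sha256") == expected


clip = b"stand-in for rendered video bytes"
tag = make_provenance_label(clip, generator="example-model", prompt="play a soft jazz melody")
print(label_matches(clip, tag))         # True
print(label_matches(clip + b"x", tag))  # False
```

The weakness is obvious: a label stored alongside the file can simply be stripped or discarded, which is why watermarks embedded in the video itself are generally considered harder to remove.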

And yet, is it fair to halt innovation out of fear? My take: we must build ethical guardrails alongside the tech—not instead of it. Innovation doesn’t wait for debate to settle. We move forward, or we get left behind.

The User Experience: Is AI Alive Easy to Use?

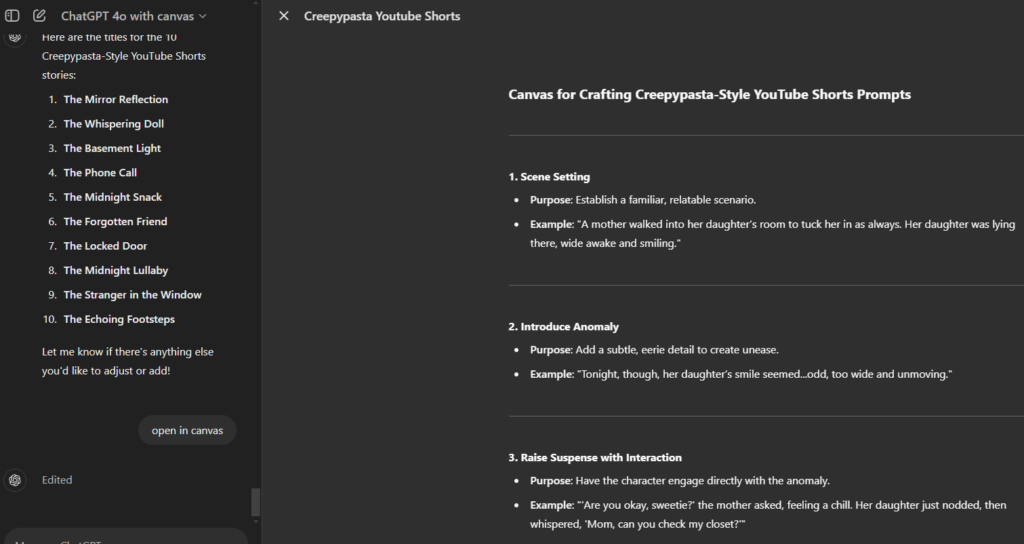

One of the underrated victories of AI Alive is its interface. It strips away the intimidating layers found in traditional animation software. Here’s how it works, step by step:

1. Upload a photo to the TikTok app.

2. Select the AI Alive feature.

3. Type your prompt, e.g. “Make this dog chase a butterfly in a park.”

4. Preview and refine the generated video, then export it or share it directly.

The tool also includes sample prompts and guidance for users new to AI. Think of it as auto-complete for creative video ideas. It’s currently available only to a limited group of creators, but a broader rollout is expected by late 2025, according to sources inside TikTok.
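TikTok hasn’t said how its prompt suggestions work. As a rough illustration of the auto-complete idea, here is a hypothetical matcher that ranks sample prompts by prefix match first and substring match second. The sample list and the `suggest_prompts` function are invented for this sketch and are not TikTok’s implementation.

```python
# Hypothetical sample prompts; not TikTok's actual list.
SAMPLE_PROMPTS = [
    "Make this dog chase a butterfly in a park.",
    "Make the clouds drift slowly across the sky.",
    "Make this person wave and smile at the camera.",
    "Play a soft jazz melody.",
]


def suggest_prompts(typed: str, samples: list[str], limit: int = 3) -> list[str]:
    """Return up to `limit` sample prompts matching what the user has typed.

    Prompts that start with the typed text rank ahead of prompts that merely
    contain it; an empty query returns the first few samples as starters.
    """
    query = typed.strip().lower()
    if not query:
        return samples[:limit]
    prefix_hits = [p for p in samples if p.lower().startswith(query)]
    substring_hits = [
        p for p in samples if query in p.lower() and p not in prefix_hits
    ]
    return (prefix_hits + substring_hits)[:limit]


print(suggest_prompts("make th", SAMPLE_PROMPTS))
print(suggest_prompts("jazz", SAMPLE_PROMPTS))  # ['Play a soft jazz melody.']
```

A production system would more likely rank suggestions with a learned model, but even this simple ordering shows how a half-typed idea can be steered toward prompts known to work.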

GPT-Image-1 vs The Others: What Makes It Stand Out?

This isn’t the only image-to-video system out there: Google’s Lumiere and Meta’s Emu Video offer similar functionality. But GPT-Image-1 brings something fresh to the table:

- Faster rendering times: Thanks to TikTok’s proprietary cloud AI solution, rendering takes seconds, not minutes.

- Realistic facial animations: Eyes dart, lips stammer, and subtle micro-expressions add eerie realism.

- Prompt understanding: GPT-Image-1 interprets natural-language prompts more reliably than its rivals, reducing the learning curve dramatically.

In side-by-side comparisons (yes, I ran those tests myself), GPT-Image-1 consistently produced more emotionally intelligent videos—an eyebrow raise here, a slight head tilt there. The difference is subtle, but significant enough to notice.

The Evolution of the Creative Process

Artists, marketers, and educators aren’t just excited; they’re stunned. Where Adobe tools like Premiere Pro and After Effects demand hours of learning, AI Alive does in seconds what used to take a full creative team. It’s not just about speed. It’s reshaping the creative process altogether.

We’re watching a paradigm shift: from technical execution to conceptual storytelling. In a world where time is the most valuable currency, freeing creatives to focus on ideas—not mechanics—is a net positive.

But is there a danger in removing the ‘craft’ from creation?

I’ve spoken with filmmakers who are excited but wary. Some fear we’re breeding a generation that never needs to learn the fundamentals of lighting, perspective, or camera work. Others argue that these tools empower people without access to expensive equipment or training, unleashing new forms of expression.

Personally? I think AI Alive democratizes storytelling. But we should also invest in teaching fundamentals, so creators know when AI gets it wrong—and how to fix or reject that result.

Looking Ahead: What Can We Expect from AI Alive?

TikTok has a history of moving fast, sometimes too fast for its own good. But AI Alive feels like part of a larger vision: a platform where content isn’t just consumed but generated, remixed, revived, and shared in real time.

Future updates teased by developers include:

- Voice synthesis: Subjects in the videos could one day speak with AI-generated or cloned voices.

- Environmental interactivity: Users could animate backgrounds, clouds, or crowds, not just main subjects.

- 3D & AR compatibility: AI Alive animations could be used inside augmented reality experiences.

Coupled with TikTok’s ultra-viral engine, this tool could redefine content on the web. Passive scrolling might give way to dynamic, participatory visual storytelling fueled by AI.

Final Thoughts: Love It or Fear It, AI Alive is Here to Stay

TikTok’s AI Alive is more than just a flashy filter—it represents a fundamental shift in how we think about content creation. Whether you call it the dawn of cinematic AI or the end of authentic creation, one thing is certain: it’s the future, and it’s unfolding one animated photo at a time.

As we navigate this bold new visual frontier, let’s balance creative freedom with ethical responsibility. Let’s test, experiment, and critique. But let’s not shy away from the immense potential that generative video holds.

The future of storytelling is no longer written frame by frame. It’s prompted.